My experience and thoughts on block 3 of MDS at UBC. The courses were: DSCI 513, DSCI 522, DSCI 561, and DSCI 571.

Introduction to Block 3

This post comes to you after finishing block 3 of MDS, the final block of 2018 and the 3rd installment in this blog series. If you haven’t already, check out my post about the 2nd block of this degree so far.

What a month it was! I think I speak for everyone in the program when I say this was the most intellectually challenging yet gratifying work in the degree thus far. Quite simply, you can start to see the power behind what you are learning in classes like Supervised Learning (DSCI 571) and Workflows (DSCI 522), and the importance of having content curated by instructors who understand it deeply and know what you need to focus on and what you can learn on your own or ignore. Any student who went through this block can tell you about the basics of linear regression, how relational and semi-structured databases work, how to apply different machine learning algorithms to different problems and the pros and cons of each, as well as how to automate scripts that span the analysis process (wrangling, visualization, analysis and communication). It felt like we were starting to dig into the material that brought me here in the first place, and the content that was the real value I could offer an employer on the other side of this master’s program. Without further delay, here is each class from block 3.

Supervised Learning (DSCI-571: Dr. Mike Gelbart)

Heading into this class I was a bit intimidated looking at the material. Anyone with an interest in data science has heard of scikit-learn, machine learning, overfitting and underfitting, but I also knew these could be incredibly complex concepts that were not easy to unpack, let alone in 4 weeks. To my surprise, I learned more in this class than any other I’ve taken in this degree, hands down. Mike Gelbart delivered a “flipped” classroom style course where before each lecture we were asked to watch a video on a specific machine learning classifier that we would then cover in lecture. The opportunity to absorb information prior to lecture was a huge help in scaling my learning, and allowed me to go deeper into the concepts we covered in lecture as a result. Mike’s examples and notes were extremely clear and helpful, and when paired with the labs I could see the power of the scikit-learn library and how many of the classifiers could be applied to a range of datasets, from music rankings (Spotify) and voting patterns in political elections (Democrat vs Republican) to natural language processing (IMDB Movie Reviews), just to name a few. We also covered critical concepts like cross-validation, balancing your training and test error, and how determining the ‘accuracy’ of a given model isn’t always as straightforward as it may seem. Any student leaving this class is capable of applying machine learning algorithms while keeping in mind the fundamental trade-off involved in selecting hyperparameters, and of understanding which algorithms may be best for classification or regression problems.

There is so much to learn in this area, and while it feels like we’ve only scratched the surface of how different algorithms actually work under the hood (math and assumptions represented as code within each function) and perform under certain conditions with different kinds of data, the class taught me that these topics don’t have to be intimidating to get into. In fact, if you focus on a high-level understanding of what’s happening when an algorithm is fit to data and predicts on new data, and consider WHY some algorithms may be more effective than others at this process, you can walk away with a great foundation of machine learning fundamentals. Anybody who’s new to data science can feel a sense of fear when confronting machine learning courses, and understandably so, because these are indeed complex topics. While there is a plethora of materials available out there and it has never been easier to learn on your own, what really made MDS worth it was knowing what to learn within ML and in what order to best digest it, and having someone take you through simple examples of how it can be applied and present important concepts gradually to help build your intuition and grasp of the information.

With that in mind, I have to thank Mike and Varada (Head TA) for designing this curriculum in a way that was extremely digestible. I had heard rumours that DSCI 571 was quite difficult for the previous cohort, and I think they both took this to heart in designing the curriculum and labs this year. Hats off to them. I also consistently got the impression they cared about the success of students in the course, and would do whatever was needed to help you understand concepts, whether it’s extending or adding office hours, recommending new materials or addressing Slack questions promptly.

For any future students, coursework like this is likely a core reason why you applied to MDS, and I can happily say it is also where you will feel like you’re growing the most in this degree. I’m glad to feel I made the most of this opportunity.

Data Science Workflows (DSCI-522: Dr. Tiffany Timbers)

This class was interesting because it was the first of its kind for this year’s MDS cohort. While I had heard issues raised by some students in my cohort about fixed group work for the entirety of the course, I found it to be a great experience with my group partner. This field is an extremely collaborative one, and I valued the experience of working through both technical and social roadblocks with a partner. The group work was one unique aspect; the course also had no exams or labs, only project deadlines each week that asked us to apply what we had learned in lecture about script automation and containerization to our project. My partner and I chose an NBA dataset where we applied our supervised learning skills (a decision tree classifier) to try to predict whether a shot by LeBron James would be made or missed, and automated our wrangling, exploratory visualization and analysis process. It was an interesting blend of the visualization and wrangling skills we acquired in block 2 and the machine learning skills we cultivated in block 3.

The real focus of the course wasn’t reiterating skills we had already acquired, though; it was using tools like Make and Docker to automate these processes and encapsulate them in containers that are reproducible across platforms. I intend to apply them to all of my projects in the future, since once the framework is built they can deliver faster, more flexible results. For instance, my partner had an ingenious idea to take our project a step further by parameterizing our scripts with a keyword argument, so that when we ran the scripts and provided a ‘player name’ argument, we could run the full report for any player in the NBA, not just LeBron James. Now you can wrangle, visualize, and analyze characteristics of shots from any player in the NBA and run these scripts in just a few seconds. Amazing!
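To make the ‘player name’ idea concrete, here is a minimal sketch of a script parameterized by a command-line argument. It is not the project's actual code: the `--player` flag, the `summarize` helper and the tiny inline shot log are all hypothetical stand-ins, and a real pipeline would read a data file and be driven by Make.

```python
import argparse
import csv
import io

# Hypothetical shot log; a real project would read this from a file.
SHOTS_CSV = """player,distance_ft,made
LeBron James,2,1
LeBron James,24,0
LeBron James,5,1
Stephen Curry,26,1
Stephen Curry,28,0
"""


def summarize(player, rows):
    """Return (attempts, makes, make_rate) for one player."""
    shots = [r for r in rows if r["player"] == player]
    attempts = len(shots)
    makes = sum(int(r["made"]) for r in shots)
    rate = makes / attempts if attempts else 0.0
    return attempts, makes, rate


def main(argv=None):
    # argv=None makes argparse read the real command line (sys.argv).
    parser = argparse.ArgumentParser(description="Shot report for one player")
    parser.add_argument("--player", default="LeBron James")
    args = parser.parse_args(argv)

    rows = list(csv.DictReader(io.StringIO(SHOTS_CSV)))
    attempts, makes, rate = summarize(args.player, rows)
    print(f"{args.player}: {makes}/{attempts} made ({rate:.0%})")
    return attempts, makes, rate


# Same code, different player: only the argument changes.
main(["--player", "Stephen Curry"])
```

Saved as a script and called with `main()` instead of an explicit list, the same report could be run from a shell (or a Makefile rule) by changing only the value passed to `--player`.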

My hope is that in the future I can use Make and Docker to build more complex and flexible scripts than the project we built, perhaps a larger analysis pipeline to address questions that interest me and allow me to come back and run new ideas through a pipeline that I think may be interesting. Once the pipeline is built, as long as you are feeding it data that is structured to fit your code, you can generate results in very little time and that seems like a valuable investment for future work with similar datasets and approaches. I highly recommend becoming acquainted with script automation and firmly stand by the belief that it is worth it to invest yourself in this class. If you’re working within a larger company when you graduate, there is a very good chance that they will have large pipelines in place already, and you will be asked to take advantage of these in your work. It will save you a ton of time and energy, and you’ll be more productive in the long run. So dive in!

Tiffany’s teaching style really shines through in courses where there’s a lot of in-class coding and actionable instructions to follow in lecture. Her strength as an instructor is taking various packages and platforms that are complex and showing students in a step-wise fashion how the basics actually work. She is great at helping you realize this doesn’t have to be so complicated. Every lecture Tiffany also gave us a 5-minute break and played music, which was an unexpected but greatly appreciated mental break. I know she is mindful of our workload and stress levels, even with the little things like this. Some students had issues with their partner or some aspect of the group work, but for me it was a great experience where I could learn lots from both Tiffany and my partner without sweating looming lab or exam deadlines for the class. This helped with balancing labs and exams for the other classes as well.

For anyone interested in the NBA project my partner and I worked on for this class, it can be found on GitHub here: Lebron James for T(h)ree!

Regression I (DSCI-561: Dr. Gabriela Cohen-Frue)

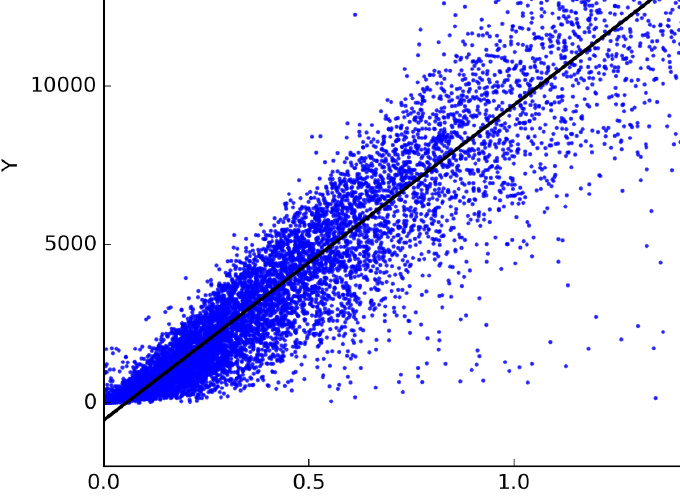

Heading into this class, I knew exactly what to expect and that it wouldn’t be easy for me. I had encountered linear regression in one of my past positions in mental health research but, admittedly, did not fully understand the ins and outs of what I was assuming, and I had generally struggled through statistics classes in my undergrad. Undoubtedly, I worked the hardest in this class to understand the material, but I can only imagine how much harder it would have been if Gabriela had not been our instructor. I thought this class moved at a reasonably slow pace, where we really focused on the core components of simple and multiple linear regression across 7 of the 8 lectures. Gabriela reiterated many themes in this class, both at the theoretical level, with respect to the thinking behind concepts such as cell-means versus reference-treatment parameterization, errors vs residuals and least squares fitting, and at the practical level of how these were expressed in R’s output.
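For readers who have not met the two parameterizations, here is a small sketch of the difference using made-up numbers (the group names and scores are hypothetical). With one categorical predictor, the cell-means form has one parameter per group, while the reference-treatment form has an intercept for the reference group plus one difference per remaining group. As far as I understand, the latter is what R's `lm` reports by default for a factor.

```python
from statistics import mean

# Hypothetical scores for three groups; "control" is the reference level.
data = {
    "control": [10.0, 12.0, 14.0],
    "drug_a": [15.0, 17.0, 19.0],
    "drug_b": [9.0, 10.0, 11.0],
}

# Cell-means parameterization: one parameter per group, its own mean.
cell_means = {group: mean(values) for group, values in data.items()}

# Reference-treatment parameterization: the intercept is the reference
# group's mean, and every other parameter is that group's mean minus
# the reference mean.
reference = "control"
intercept = cell_means[reference]
effects = {
    group: m - intercept for group, m in cell_means.items() if group != reference
}

print("cell means:", cell_means)
print("intercept:", intercept, "effects:", effects)
```

Both versions describe the same fitted group means; only the way the coefficients are reported differs, which is why the same model can look so different in regression output.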

Ultimately, as data scientists we will be working with a programming language that computes many of these statistical measures, and so the burden of understanding falls more on the side of using R’s functions and interpreting their output than explicitly calculating the measures and remembering formulas. I thought in general she balanced these demands quite well, but I think she could stray a bit further from the calculus behind how certain measures are calculated and get back to R. I really wanted a deeper discussion of the direct R output and the higher-level forms of the concepts we were discussing, like multicollinearity and the coefficient of determination, among others. With only 4 weeks to learn regression, spending a lecture or two on the calculus behind formulas simply isn’t productive, in my opinion. However, in general she is a wonderful instructor with an intimate understanding of statistics that can’t be overstated. Ask a ton of questions in this class; I promise you’re not alone in wondering what that standard error formula means, how to manage outliers, or how the different forms of fitting regression models work. This is no fault of the instructor; statistics is a slippery fish of knowledge that takes a lot of effort and attention to not only understand but apply properly. The more questions you ask and the more you can remain patient in your learning, the firmer the grasp you will have on this statistical fish.

I thought Vincenzo (TA) did a solid job with the lab design as well, gradually building our intuition of how regression truly worked while challenging us to consider what the output of lm vs anova vs aov really meant. Overall, this course was a pleasant surprise given my past struggles in statistics. Gabriela and Vincenzo will help you see the value in understanding these concepts, as they will undoubtedly arise in an analytics career. Examining relationships between data, fitting linear models and making predictions about future observations are all critical skills in an analyst’s toolbox. Choose to cultivate them!

Databases and Data Retrieval (DSCI-513: Bhav Dhillon)

Heading into this class I had no experience with SQL or really any relational or semi-structured databases, and while I did finish with a reasonable understanding of the material, this class left a fair bit of room for improvement. A common trend I am noticing within the degree is that the classes with instructors who are furthest removed from the computer science/MDS/statistics faculty tend to be the worst from an educational standpoint. I don’t say this with respect to their ability as instructors, because I don’t think that’s the case at all; in fact, they have been very talented and driven. What I mean is that it’s harder for an instructor to integrate lecture material into the labs and to draw on past successes in other classes if they communicate less with the core faculty that curates the overall curriculum. This gap in communication showed up in general student engagement and concept acquisition in DSCI 513. Bhav was a post-doc brought in to teach this specific class; she was not embedded within the overarching educational picture of the program and was not familiar with content we had already learned that could have informed her teaching (e.g. inner joins, select, etc.). As a result, the lectures were quite poorly laid out with respect to how they integrated with lab work, plain and simple. That said, she was kind, knowledgeable and always available for help, and I found she was much more effective one on one with students. Again, I don’t think it’s a reflection of her abilities at all, simply an organizational critique. The overarching concepts were laid out by Bhav, and while the early lectures seemed to confuse her at times along with the students, by the end they were clearer and more useful, given that we hadn’t encountered the later concepts in previous courses.

Every student can tell you about primary and foreign keys in databases, and ER diagrams for different forms of relationships between information. That said, I can’t help but feel like this class still left lots to be desired. It should have been what data wrangling (DSCI-523) was but with SQL/JSON, and it just didn’t live up to that standard. An additional issue was having to use SQLite, which is not really common in the working world despite its syntactic similarities to MySQL, the more common option. Again, the reason for this is that UBC does not have infrastructure in place for relational databases, so we were forced to use SQLite. In my opinion, better organization would go a long way in improving this class, rather than necessarily changing the instructor or the material itself.
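As a quick illustration of primary keys, foreign keys and joins, here is a minimal sketch using Python's built-in `sqlite3` module with an in-memory database. The `team` and `player` tables and their rows are made up for this example and are not taken from the course labs.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
# SQLite only enforces foreign keys when this pragma is switched on.
conn.execute("PRAGMA foreign_keys = ON")

conn.executescript("""
CREATE TABLE team (
    team_id INTEGER PRIMARY KEY,
    name    TEXT NOT NULL
);
CREATE TABLE player (
    player_id INTEGER PRIMARY KEY,
    name      TEXT NOT NULL,
    team_id   INTEGER REFERENCES team(team_id)  -- foreign key to team
);
INSERT INTO team VALUES (1, 'Lakers'), (2, 'Warriors'), (3, 'Raptors');
INSERT INTO player VALUES (1, 'LeBron James', 1), (2, 'Stephen Curry', 2);
""")

# Inner join: only teams that have at least one matching player.
inner = conn.execute(
    "SELECT t.name, p.name FROM team t "
    "JOIN player p ON p.team_id = t.team_id ORDER BY t.team_id"
).fetchall()

# Left join: every team, with None where no player matches.
left = conn.execute(
    "SELECT t.name, p.name FROM team t "
    "LEFT JOIN player p ON p.team_id = t.team_id ORDER BY t.team_id"
).fetchall()

# The foreign key rejects a player that points at a team that does not exist.
try:
    conn.execute("INSERT INTO player VALUES (3, 'Nobody', 99)")
    fk_rejected = False
except sqlite3.IntegrityError:
    fk_rejected = True

print(inner)
print(left)
print("foreign key enforced:", fk_rejected)
```

The inner join drops the team with no players, while the left join keeps it with an empty player column. That is the kind of difference that is much easier to see on a tiny table than in a large lab dataset.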

Without being a huge downer, I still learned lots from this class, and Rodolfo (TA) challenged us in the labs which forced me to get creative with my learning. The majority of my breakthroughs in lab work came through trial-and-error and referencing alternative resources beyond lecture notes to develop the necessary understanding to get through difficult questions. Having to go that extra mile elevated my thinking around how to work with databases, the best ways to design them given particular constraints, and I improved drastically at visualizing different kinds of joins between separate tables! So I guess I have that going for me. In sum, I hope for next year they find ways to improve upon this course structurally, and increase the communication between core faculty and the course instructor regarding what concepts we may have already covered. I still was able to learn a ton about databases, it just took more energy given the constraints I’ve mentioned but if you’re motivated, you’ll be just fine.

Closing Thoughts & Suggestions

Block 3 was by far the most action-packed block in terms of the density of material, and the most exciting given the power of what we were learning. Generally speaking, I have great things to say about the teaching staff in this block. I know far more than I did a month ago and don’t see myself forgetting the core materials in each class because they were so fundamental. Linear models, machine learning algorithms for classification and regression, how relational databases work via keys and constraints, and script automation are such valuable bodies of knowledge for a data scientist, analyst or really anyone working with data. Aside from some structural issues with one course and the recurring fact that I just don’t have enough time to go into everything that intrigues me, this was a great 4 weeks.

Additional Ideas/Recommendations

One idea that came to me while working in this block, for the wrangling and database classes or really any class that requires more technical skill, is to provide a ‘puzzle dataset’ to students at the start of the block that has various manipulations applied and is considered ‘untidy’. As students learn the skills for a course (perhaps multiple courses), they will be able to apply them to this puzzle dataset and, by the end, possess all the skills to actually solve it. They can then check their work against a key that the TA has, which gives students some optional practice problems to tackle. Obvious classes where this can be implemented are 523 with R (dplyr) and 513 (SQL), but any course where instructors feel they can offer a big-picture assignment might benefit from this addition to the regular lab work. This could be extended to questions where a dataset must be wrangled and a linear model fitted to some portion of the data once it is tidied, incorporating multiple classes and areas of understanding to resolve the problem. It also comes at very little cost to the TAs, because the head TA is already designing databases for the students to work with, and it provides one more large project the students can grade themselves by comparing with a key.